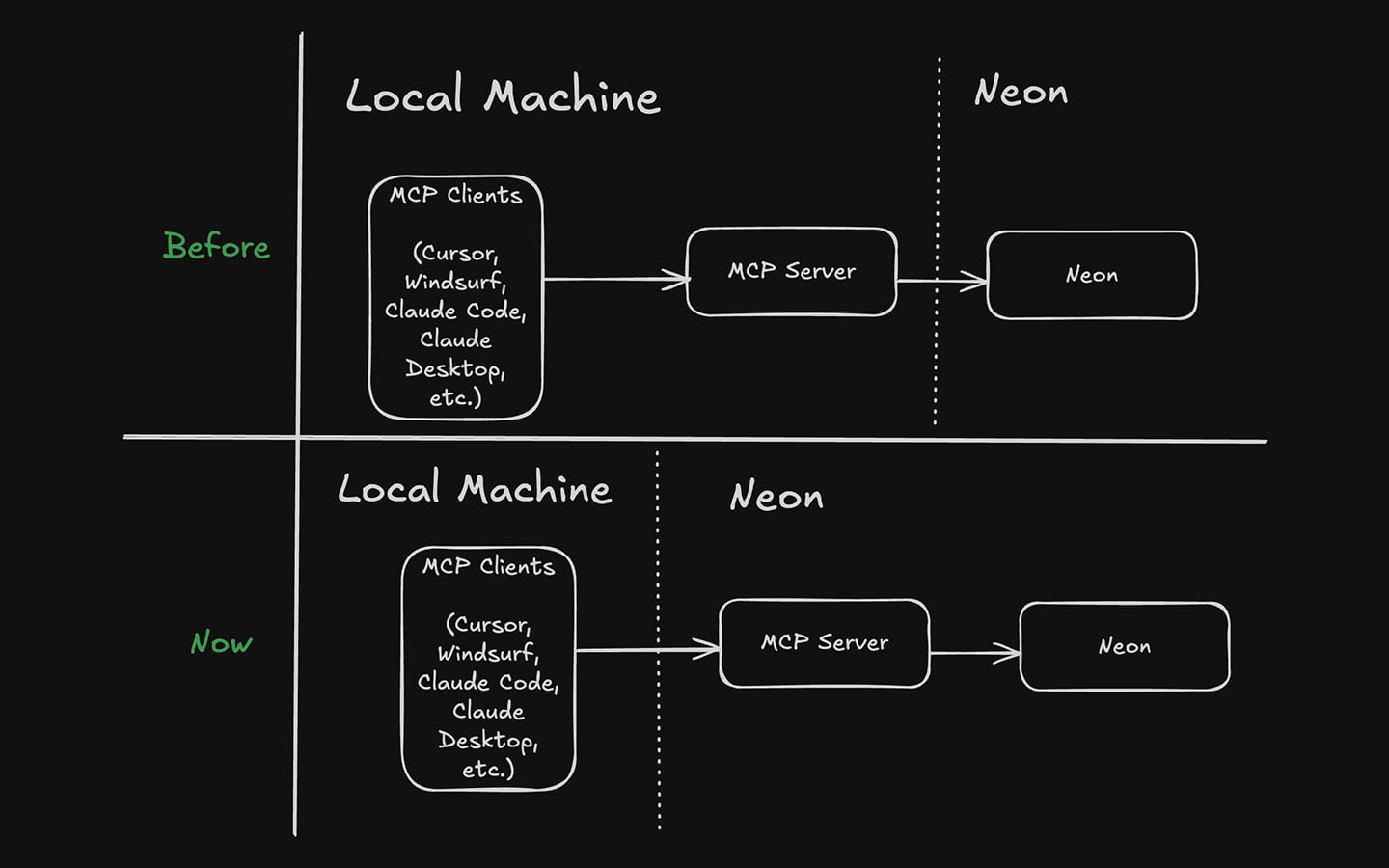

At Neon, we like to be on top of things, especially when it comes to AI. We first announced our MCP server back on December 3rd, 2024. That was a long time before MCP really took off, and we’ve been iterating on our MCP server’s capabilities ever since. You can read more about our MCP server in our docs.

We’ve been closely following the Model Context Protocol specification and waiting eagerly for the protocol to be ready for remote hosting. Today, we’re pleased to announce that a hosted version of our MCP server is available to our users.

A remote MCP Server greatly simplifies setup in any client, such as Cursor or Windsurf, because you no longer have to create API keys in our service. Furthermore, as we add new features to our MCP server, our users will automatically get them without having to upgrade their local setup.

How to use Neon’s remote MCP Server

Because the MCP specification for OAuth is still very new, we’re launching this in preview. We will likely have to make some changes to the setup, and things might break in unexpected ways during the first few weeks. Nevertheless, the following instructions should be simple to try today.

- Go to your MCP Client’s settings and register a new MCP Server

- As an example, if you’re using Cursor, add the following to the “MCP Servers” configuration in the “Cursor Settings”:

"Neon": {

"command": "npx",

"args": ["-y", "mcp-remote", "https://mcp.neon.tech/sse"]

}That’s it, our hosted MCP Server is running at https://mcp.neon.tech.
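If you manage client configuration with a script rather than by hand, the entry above can be merged into an existing configuration object. The following is only a sketch. The top-level `mcpServers` key, the `McpConfig` type, and the `addNeonServer` helper are assumptions made for illustration, so check your client’s documentation for the actual file layout.

```typescript
// Shape of a single MCP server entry, matching the snippet above.
interface McpServerEntry {
  command: string;
  args: string[];
}

// Assumed (hypothetical) shape of a client's MCP configuration file.
interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// The entry from this post: run mcp-remote via npx against the hosted endpoint.
const NEON_ENTRY: McpServerEntry = {
  command: "npx",
  args: ["-y", "mcp-remote", "https://mcp.neon.tech/sse"],
};

// Returns a new config with a "Neon" entry added.
// Other configured servers are preserved and the input is not mutated.
function addNeonServer(config: McpConfig): McpConfig {
  return {
    ...config,
    mcpServers: {
      ...config.mcpServers,
      Neon: { command: NEON_ENTRY.command, args: [...NEON_ENTRY.args] },
    },
  };
}

// Usage: start from an empty configuration and print the result as JSON.
const updated = addNeonServer({ mcpServers: {} });
console.log(JSON.stringify(updated, null, 2));
```

Returning a copy instead of editing the object in place makes it easy to compare the old and new configuration before writing anything to disk.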

Here’s a video of everything from start to finish using Windsurf:

If you’re looking for instructions for different clients, here they are:

Note that these instructions might still refer to the self-managed version of our MCP Server, but in time they will all be updated to have both methods.

How is it built?

Well, everything is open source, of course. Soon, we’ll be writing more about how we implemented this. For now, you can refer to the source code that’s available on GitHub here. The pull request with the bulk of the work can be found here. Note that for now, we’re using the geelen/mcp-remote Node package to make this all work (this will almost certainly change in the near future).

The MCP Server communicates with clients via Server-Sent Events (SSE), and it can be deployed on many different cloud providers.
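For readers unfamiliar with SSE, here is a rough sketch of what a client sees on the wire: a text stream of `event:` and `data:` lines, where a blank line ends each event. The `parseSse` function and the sample payload below are illustrative assumptions, not code from our server, and the parser ignores the `id` and `retry` fields for brevity.

```typescript
// One parsed Server-Sent Event.
interface SseEvent {
  event: string; // event type, "message" when the stream does not name one
  data: string;  // payload, with multiple data lines joined by "\n"
}

// Minimal parser for a complete SSE text body (not a streaming parser).
function parseSse(text: string): SseEvent[] {
  const events: SseEvent[] = [];
  let eventType = "";
  let dataLines: string[] = [];

  for (const line of text.split(/\r\n|\n|\r/)) {
    if (line === "") {
      // A blank line dispatches the pending event, if it carries any data.
      if (dataLines.length > 0) {
        events.push({ event: eventType || "message", data: dataLines.join("\n") });
      }
      eventType = "";
      dataLines = [];
      continue;
    }
    if (line.startsWith(":")) continue; // comment line, often used as keep-alive

    const colon = line.indexOf(":");
    const field = colon === -1 ? line : line.slice(0, colon);
    let value = colon === -1 ? "" : line.slice(colon + 1);
    if (value.startsWith(" ")) value = value.slice(1); // strip one leading space

    if (field === "event") eventType = value;
    else if (field === "data") dataLines.push(value);
    // Other fields (id, retry) are ignored in this sketch.
  }
  return events;
}

// Usage with a made-up stream: one named event and one default "message" event.
const sample =
  ": keep-alive\n" +
  "event: status\n" +
  "data: ready\n" +
  "\n" +
  "data: first line\n" +
  "data: second line\n" +
  "\n";
console.log(parseSse(sample));
```

In practice a client library handles this parsing for you. The sketch is only meant to show why SSE works over plain HTTP and is straightforward to host on most platforms.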

What’s next?

Our remote MCP Server is just the beginning. As the MCP specification evolves, we’re committed to refining and expanding our offering to provide a reliable experience for developers. By bringing MCP to the cloud, we’re making AI workflows more accessible, scalable, and future-proof. We can’t wait to see what you build with it. Try it out today, and let us know your feedback on Discord. We’re listening.